More autonomy through smart environmental sensors and AI cameras

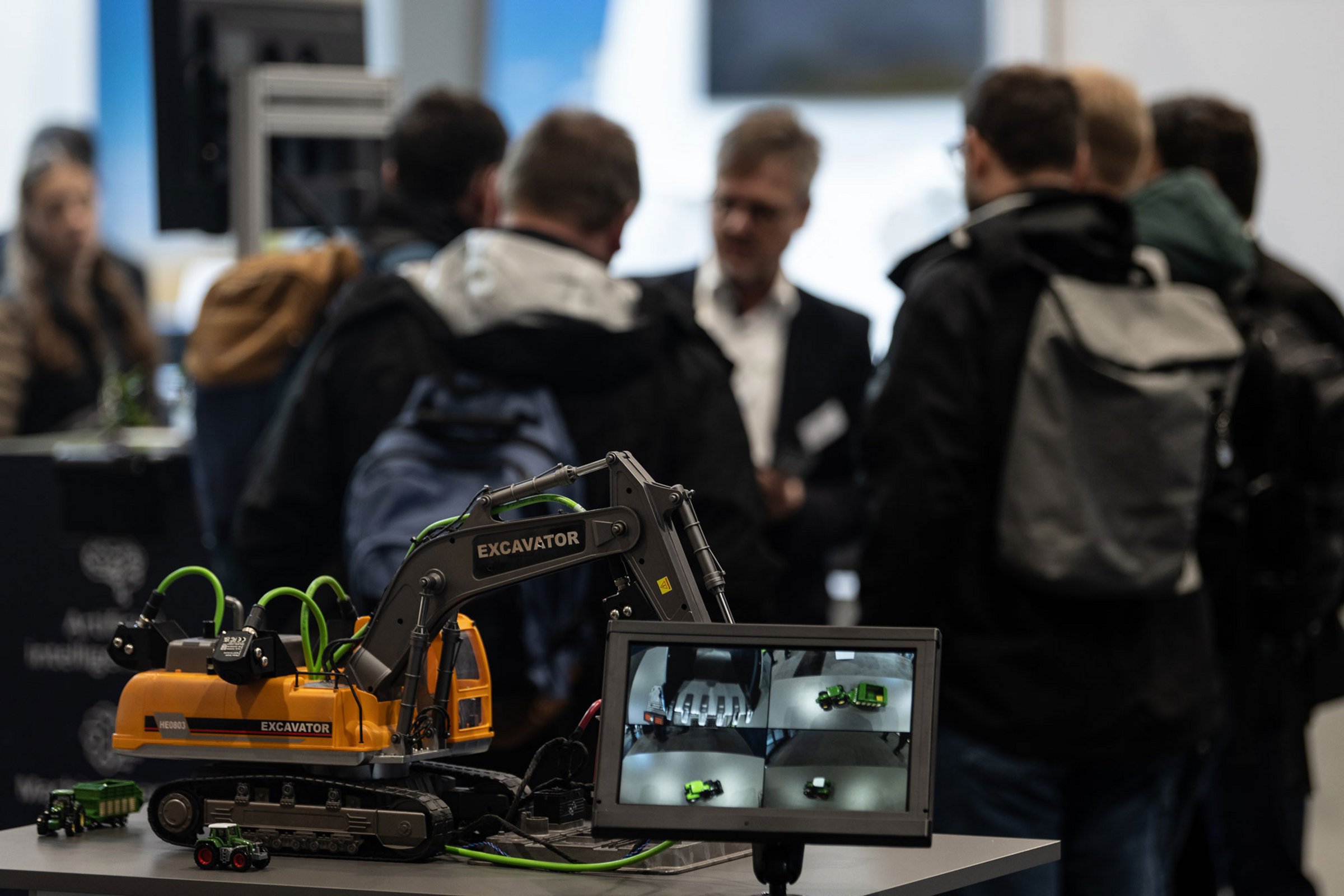

Whether on construction sites or in the field, the demands of automation in the off-highway sector are diverse. To meet these challenges, exhibitors at SYSTEMS & COMPONENTS 2025 are harnessing the full potential of intelligent sensors and camera systems – laying the technological groundwork for autonomous mobile machinery. The assistance functions presented from November 9 to 15 at the Hannover exhibition grounds aim to enhance safety and precision in everyday operations.

When the doors open to this year’s SYSTEMS & COMPONENTS in Hannover, all key players in the off-highway industry are expected to be present. This B2B platform, once again held as part of Agritechnica 2025, focuses entirely on components, systems, and solutions for the mobile working machines of today and tomorrow. One thing is clear: it’s the rapid progress in automation that is currently driving innovation.

“Intelligent networking and digitalization – both inside and outside the machines – are playing an increasingly important role,” confirms Petra Kaiser, Brand Manager of SYSTEMS & COMPONENTS, DLG (German Agricultural Society). Cloud-based networking, wireless machine-to-machine communication, and powerful assistance functions are among the key trends reflected at the Hannover exhibition grounds.

The clear benefits of assistance systems are prompting manufacturers to integrate smart sensors and camera systems into their agricultural, forestry, construction, and mining machinery. The resulting solutions respond to the growing demand for higher productivity.

Key tasks performed by sensors include environmental detection, assistance functions, motion control, positioning, and functional safety. Functional safety in particular is a core requirement and an integral part of many of the developments showcased at SYSTEMS & COMPONENTS.

Flexible sensor modules for assistance systems

But how can the machine's movements be made precise? Outdoor operation calls for robust sensors and cameras that work reliably in harsh conditions such as fog, heavy rain, snow, and dust, while giving operators an unobstructed all-around view.

To meet these demands, exhibitors offer a wide-ranging portfolio: 3D streaming cameras, LiDAR sensors or laser scanners, inclination sensors, encoders, and inductive proximity sensors. A broad spectrum of technologies is available, all of which can be flexibly and precisely tailored to specific applications and assistance functions.

The offering also includes ultrasonic sensors for the close range. They work on the echo-sounding principle, measuring how long an emitted sound pulse takes to return from an object, and are well suited to monitoring the working area of mobile machines. They give operators in the cab the feedback they need to determine the position and orientation of attached tools.
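The echo-sounding principle can be illustrated with a minimal sketch: the sensor measures the round-trip time of a sound pulse, and half of the total path, at the speed of sound, gives the distance. The function names and the linear approximation for the speed of sound in air (about 331.3 + 0.606·T m/s, with T in °C) are assumptions for illustration; commercial sensors handle such compensation internally, and this is not any vendor's firmware.

```python
def speed_of_sound_mps(temp_c: float) -> float:
    """Approximate speed of sound in dry air (linear fit, valid near room temperature)."""
    return 331.3 + 0.606 * temp_c


def echo_distance_m(round_trip_s: float, temp_c: float = 20.0) -> float:
    """Distance to the reflecting surface from an ultrasonic echo's round-trip time.

    The pulse travels to the target and back, so the one-way distance is half
    of the total path length.
    """
    return speed_of_sound_mps(temp_c) * round_trip_s / 2.0


# Example: an echo arriving after 10 ms at 20 °C corresponds to roughly 1.72 m.
print(round(echo_distance_m(0.010), 2))
```

Because air temperature shifts the speed of sound by roughly 0.6 m/s per degree, an uncompensated sensor would drift by a few percent between a cold morning and a hot afternoon.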

Ultrasonic sensors such as Baumer's UF401V can even suppress temporary disruptions in the distance measurement, delivering continuous data even when plant material briefly passes between the sensor and the target surface. This makes the sensor particularly effective in agricultural applications. Thanks to its short response times, it is well suited to agile tasks such as detecting bales and bale wrap or measuring distances.
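How a sensor might bridge brief obstructions can be sketched with a simple hold-last-valid filter: a sudden jump in distance is first treated as a passing obstruction and the previous value is held; only if the jump persists for more than a few samples is it accepted as a real change. This is an assumed, generic technique, not Baumer's documented algorithm for the UF401V, and the class name and thresholds are hypothetical.

```python
from typing import Optional


class DropoutSuppressor:
    """Hold the last accepted distance while short-lived outliers pass by.

    A reading that jumps by more than ``max_jump_m`` from the last accepted value
    is first treated as a temporary obstruction (e.g. plant material crossing the
    beam). If the jump persists for more than ``max_hold_samples`` consecutive
    readings, it is accepted as a genuine change in distance.
    """

    def __init__(self, max_jump_m: float = 0.2, max_hold_samples: int = 5) -> None:
        self.max_jump_m = max_jump_m
        self.max_hold_samples = max_hold_samples
        self._last_valid: Optional[float] = None
        self._held = 0  # consecutive readings currently being suppressed

    def update(self, reading_m: float) -> float:
        if self._last_valid is None:  # first reading: nothing to compare against
            self._last_valid = reading_m
            return reading_m
        if abs(reading_m - self._last_valid) <= self.max_jump_m:
            self._last_valid = reading_m  # plausible reading: accept it
            self._held = 0
            return reading_m
        self._held += 1
        if self._held > self.max_hold_samples:
            self._last_valid = reading_m  # jump persisted: accept the new level
            self._held = 0
            return reading_m
        return self._last_valid  # suspected obstruction: keep the previous value


f = DropoutSuppressor(max_jump_m=0.2, max_hold_samples=3)
print([f.update(r) for r in [1.0, 1.01, 0.3, 0.3, 1.02, 1.0]])
# [1.0, 1.01, 1.01, 1.01, 1.02, 1.0]
```

The trade-off lies in `max_hold_samples`: a longer hold rides out bigger clumps of crop, but delays the response when the surface really does move.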

The UF401V can also be used on hydraulically operated machine parts that require position feedback. For example, it supplies reliable data for precisely detecting track widths and sprayer heights.

Greater driving safety through artificial intelligence

Whether for rear-area monitoring, work-zone surveillance, or side-area detection, reliable data acquisition is critical. This matters all the more for machine vision and AI, where clean input data helps avoid the so-called GIGO effect (Garbage In, Garbage Out): the quality of the output depends directly on the quality of the input.

As a result, technology providers are increasingly focusing on deep learning, 3D recognition, and embedded vision, the technologies expected to take assistance systems to the next level of development.

Christian Klausner, Director of Product Management at Sensor-Technik Wiedemann (STW), understands the challenges involved: “As long as a human operator is still seated in the vehicle, functional safety is important—but not as critical as it is, for example, in field robotics.”

That’s because such robots rely on AI-based environmental perception that can independently detect when a hazardous situation arises and take appropriate countermeasures, for example via the actuation systems. Modern 3D LiDAR sensors allow these robots to capture the complex topography of a typical agricultural field. Compared with radar, LiDAR (Light Detection and Ranging) has the advantage of delivering high-resolution 3D data in real time, even over long distances.
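To illustrate how LiDAR yields 3D data, the sketch below converts a single laser return into a Cartesian point: the range follows from the pulse's round-trip time at the speed of light, and the beam's azimuth and elevation angles place that range in space. The function name and the axis convention (x forward, y left, z up) are assumptions; real sensors typically report points directly in their own documented coordinate frame.

```python
import math

SPEED_OF_LIGHT_MPS = 299_792_458.0


def lidar_point(round_trip_s: float, azimuth_deg: float, elevation_deg: float):
    """Convert one LiDAR return into an (x, y, z) point in metres.

    Assumed frame: x forward, y left, z up; azimuth measured from x toward y,
    elevation measured up from the horizontal plane.
    """
    r = SPEED_OF_LIGHT_MPS * round_trip_s / 2.0  # one-way range
    az = math.radians(azimuth_deg)
    el = math.radians(elevation_deg)
    return (
        r * math.cos(el) * math.cos(az),
        r * math.cos(el) * math.sin(az),
        r * math.sin(el),
    )


# A return after about 200 ns lies roughly 30 m away.
x, y, z = lidar_point(200e-9, azimuth_deg=10.0, elevation_deg=-2.0)
print(f"x={x:.2f} y={y:.2f} z={z:.2f}")
```

Repeating this for every beam and every scan angle produces the point cloud from which the field's topography is reconstructed.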

Sensor fusion on the rise

One of the biggest challenges in advancing assistance functions lies in the wide variety of concepts and potential system architectures. While LiDAR was still seen as a competitor to cameras or radar just a few years ago, off-highway experts now agree: the question is no longer “either-or” but “both-and”.

“Sensor fusion combines data from multiple sources. This has the potential to increase reliability. This process will play a bigger role in the future of off-highway vehicle systems,” says Petra Kaiser from the DLG.

These developments are made possible in part by the latest generation of high-performance processors, which can fuse 2D and 3D data directly. This enables the extraction of information that individual sensors alone cannot provide.
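A classic way to see why fusing sources can raise reliability is inverse-variance weighting of two independent measurements of the same quantity: each estimate is weighted by how certain it is, and the fused variance is smaller than that of either input. The sketch below assumes Gaussian, uncorrelated errors and made-up variances; it is a textbook illustration, not the fusion method of any particular product.

```python
from typing import Tuple


def fuse_estimates(d1: float, var1: float, d2: float, var2: float) -> Tuple[float, float]:
    """Inverse-variance weighted fusion of two independent estimates.

    Returns the fused value and its variance. The more certain input
    (smaller variance) receives the larger weight.
    """
    w1 = 1.0 / var1
    w2 = 1.0 / var2
    fused = (w1 * d1 + w2 * d2) / (w1 + w2)
    fused_var = 1.0 / (w1 + w2)
    return fused, fused_var


# Hypothetical readings: camera-based estimate 5.2 m (variance 0.04 m²),
# 3D time-of-flight estimate 5.0 m (variance 0.01 m²).
distance, variance = fuse_estimates(5.2, 0.04, 5.0, 0.01)
print(f"{distance:.2f} m, variance {variance:.3f} m²")  # 5.04 m, variance 0.008 m²
```

The fused variance (0.008 m²) is lower than even the better sensor's (0.01 m²), which is the statistical core of the reliability gain.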

A prime example of this trend is the O3M AI smart sensor system from ifm. It generates a video feed of the surroundings while simultaneously displaying precise distance information for all objects and people within a 25-meter radius. This is achieved by combining a 3D PMD (Photonic Mixer Device) sensor with a 2D AI camera in an embedded system for area monitoring.
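The 2D/3D combination can be pictured as follows: the AI camera supplies labeled bounding boxes, the 3D sensor supplies a depth value per pixel, and each detection is assigned the median depth inside its box. This sketch assumes both images are already registered pixel-for-pixel (as a pre-calibrated platform would provide), that a depth of 0 marks an invalid pixel, and uses hypothetical names (`Detection`, `annotate`); it does not reflect ifm's actual interface.

```python
from dataclasses import dataclass
from statistics import median
from typing import List, Optional, Sequence, Tuple


@dataclass
class Detection:
    """A labeled 2D bounding box from the AI camera (pixel coordinates, end-exclusive)."""
    label: str
    x0: int
    y0: int
    x1: int
    y1: int


def box_distance_m(depth: Sequence[Sequence[float]], det: Detection) -> Optional[float]:
    """Median depth of the valid (non-zero) pixels inside the detection's box."""
    values = [
        depth[y][x]
        for y in range(det.y0, det.y1)
        for x in range(det.x0, det.x1)
        if depth[y][x] > 0.0
    ]
    return median(values) if values else None


def annotate(
    detections: List[Detection],
    depth: Sequence[Sequence[float]],
    max_range_m: float = 25.0,
) -> List[Tuple[str, float]]:
    """Attach a distance to each detection and keep those inside the monitored radius."""
    result = []
    for det in detections:
        dist = box_distance_m(depth, det)
        if dist is not None and dist <= max_range_m:
            result.append((det.label, dist))
    return result


depth = [
    [3.0, 3.2, 30.0, 30.0],
    [0.0, 3.1, 30.0, 30.0],
    [9.0, 9.0, 9.0, 9.0],
    [9.0, 9.0, 9.0, 9.0],
]
print(annotate([Detection("person", 0, 0, 2, 2), Detection("tree", 2, 0, 4, 2)], depth))
# [('person', 3.1)]
```

The median is used rather than the mean so that a few background pixels at the edge of a box do not pull the distance far off.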

What makes it special: the measurement works independently of surface color. By fusing data from two different sensor types on a fully integrated, pre-calibrated platform, the system significantly increases reliability. Fewer false alarms in turn make work processes more efficient.

The system continuously determines whether an emergency stop, a controlled halt, or a slowdown is appropriate in a given situation. It detects with an accuracy of 10 centimeters whether a person is in the immediate vicinity, even if they are lying on the ground, wearing dark clothing, or partially hidden by large tools.
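One way such a graded response could be decided is to compare the distance to a detected person with the machine's stopping distance under gentle (service) braking, v·t_r + v²/(2a): if gentle braking would not stop the machine with a safety margin, an emergency stop is triggered; if it would, a controlled halt suffices; further out, the machine only slows down. All thresholds, the deceleration, the reaction time, and the margin below are hypothetical, and a real safety function would have to be designed and validated to the applicable functional-safety standards.

```python
from enum import Enum
from typing import Optional


class Action(Enum):
    CONTINUE = "continue"
    SLOW_DOWN = "slow down"
    CONTROLLED_HALT = "controlled halt"
    EMERGENCY_STOP = "emergency stop"


def stopping_distance_m(speed_mps: float, decel_mps2: float, reaction_s: float) -> float:
    """Distance covered during the reaction time plus the braking distance."""
    return speed_mps * reaction_s + speed_mps ** 2 / (2.0 * decel_mps2)


def choose_action(
    person_distance_m: Optional[float],
    speed_mps: float,
    *,
    service_decel_mps2: float = 1.0,  # hypothetical gentle braking
    reaction_s: float = 0.5,          # hypothetical system latency
    margin_m: float = 2.0,            # hypothetical safety buffer
) -> Action:
    """Map the person's distance onto graded responses, strongest when closest."""
    if person_distance_m is None:  # nobody detected
        return Action.CONTINUE
    halt_dist = stopping_distance_m(speed_mps, service_decel_mps2, reaction_s) + margin_m
    protective_field = 1.5 * halt_dist  # hypothetical: start stopping inside this range
    warning_field = 3.0 * halt_dist     # hypothetical: reduce speed inside this range
    if person_distance_m <= halt_dist:
        return Action.EMERGENCY_STOP    # gentle braking would not stop in time
    if person_distance_m <= protective_field:
        return Action.CONTROLLED_HALT   # gentle braking stops with margin to spare
    if person_distance_m <= warning_field:
        return Action.SLOW_DOWN
    return Action.CONTINUE


for d in (4.0, 6.0, 10.0, 20.0):
    print(d, choose_action(d, speed_mps=2.0).value)
```

Tying the zones to the current speed means a slow-moving machine can work closer to people before reacting, while a fast one reacts earlier.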

Autonomy becomes available for more machines

Sensor-based assistance functions are an important and logical next step toward full automation. They offer a response to one of the biggest challenges in the off-highway sector: the shortage of drivers and skilled workers.

“Our agriculture, construction, and commercial landscaping customers all have work that must get done at certain times of the day and year, yet there is not enough available and skilled labor to do the work,” said Jahmy Hindman, Chief Technology Officer at John Deere. “Autonomy can help address this challenge. That’s why we’re extending our technology stack to enable more machines to operate safely and autonomously in unique and complex environments.”

The second-generation autonomy package introduced by John Deere earlier this year combines advanced image recognition, AI, and cameras to support machines in precision agriculture. Equipped with 16 individual cameras that provide a 360-degree view of the field, it enables the autonomous operation of a 9RX tractor. This allows farmers to step away from the machine and focus on other important tasks.

One possible application is spraying crop protection products in orchards. For this purpose, the narrow-track 5ML tractor has been equipped with the autonomy package plus additional LiDAR sensors to compensate for the lower light levels in orchards.

A preview of SYSTEMS & COMPONENTS shows that the companies exhibiting in Hannover from November 9 to 15 already offer a range of solutions on the way to fully automated mobile machinery. The continuous development of environmental sensing and multi-camera systems will open up numerous applications for innovative assistance functions in the coming years.